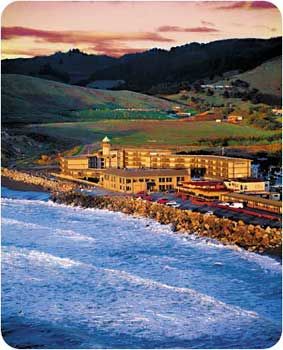

This year, CANDE will be held from the evening of Thursday, Nov. 6 through Saturday, Nov. 8, ending in the early afternoon to allow return travel on the weekend. The location is the Lighthouse Hotel in Pacifica, CA. Although the setting is rural, with a small artist town adjacent to the site, it is a short trip (18 miles) to San Francisco International Airport and allows easy access to the greater Bay Area. As always, registrations are for the full conference; a special discount registration (not including additional lodging) is available for spouses or non-participating guests. Pacifica is a clement seaside community with average temperatures of 10-18°C (50-65°F) and a small chance of rain in November. There are numerous walking and biking trails adjacent to the hotel, as well as shopping and, of course, beach access within close walking distance. The hotel website is:

https://www.bestwesternlighthouse.com/ and the Google Map URL is:

https://maps.google.com/maps?cid=0,0,14996598030813402332