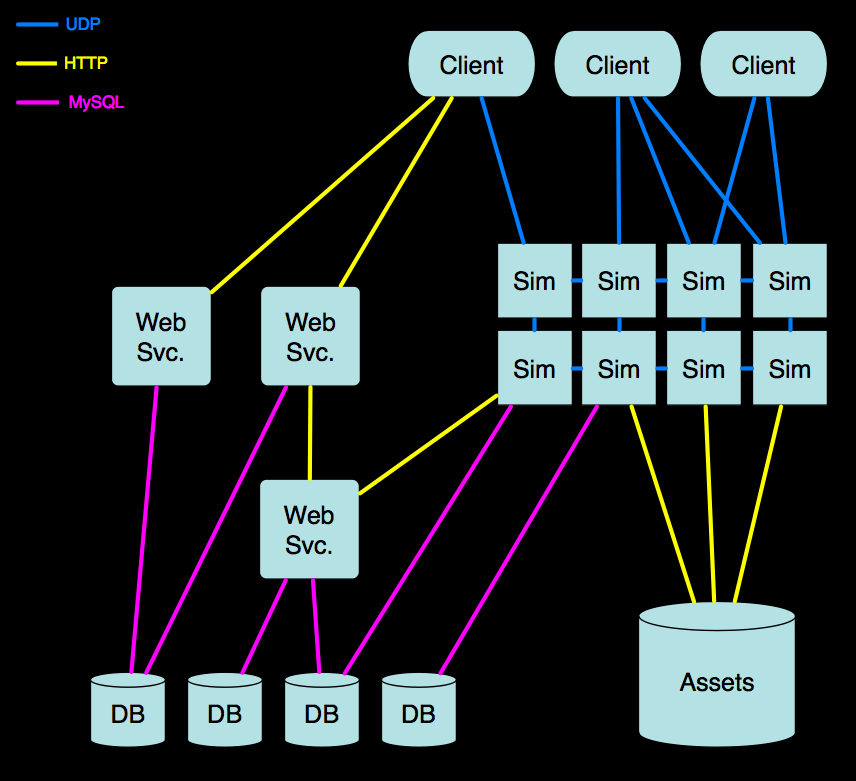

Behind the Scenes at Linden Lab

What is Second Life?

- An Online Virtual World

- 3D modeling tools

- Physics simulation

- Fully-featured scripting language

- L$ Currency Exchange (LindeX)

- But what do people do with it?

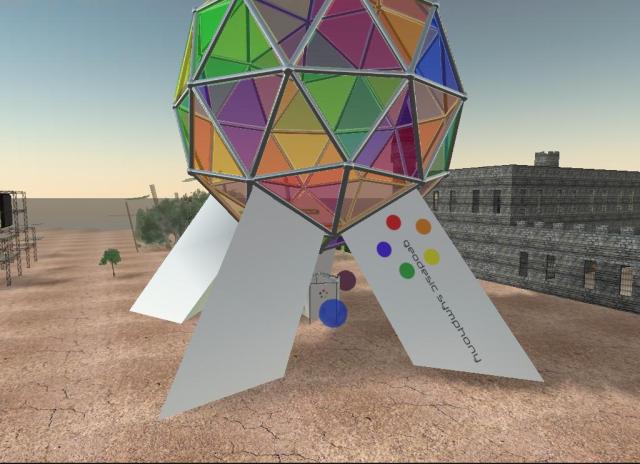

Creativity

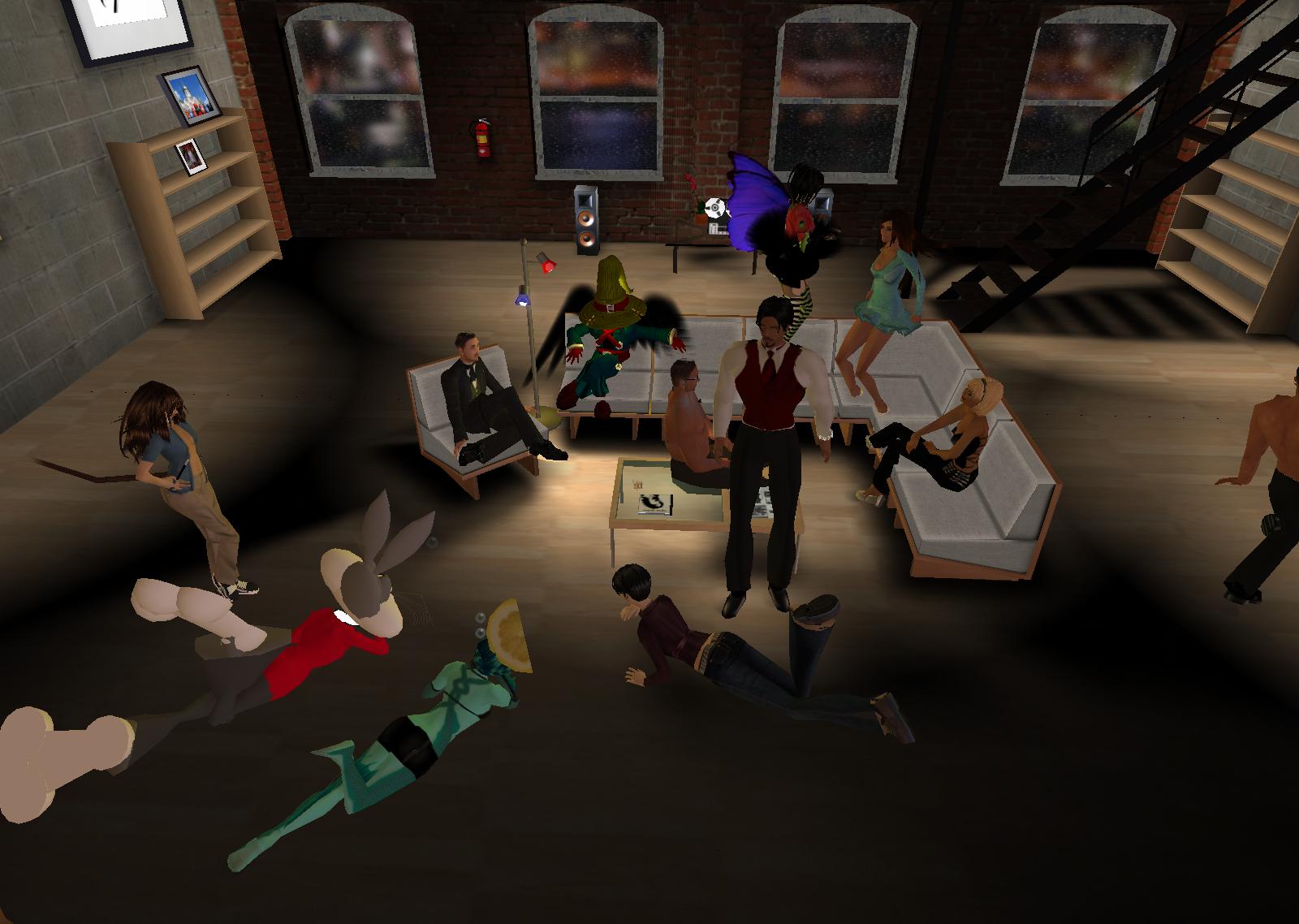

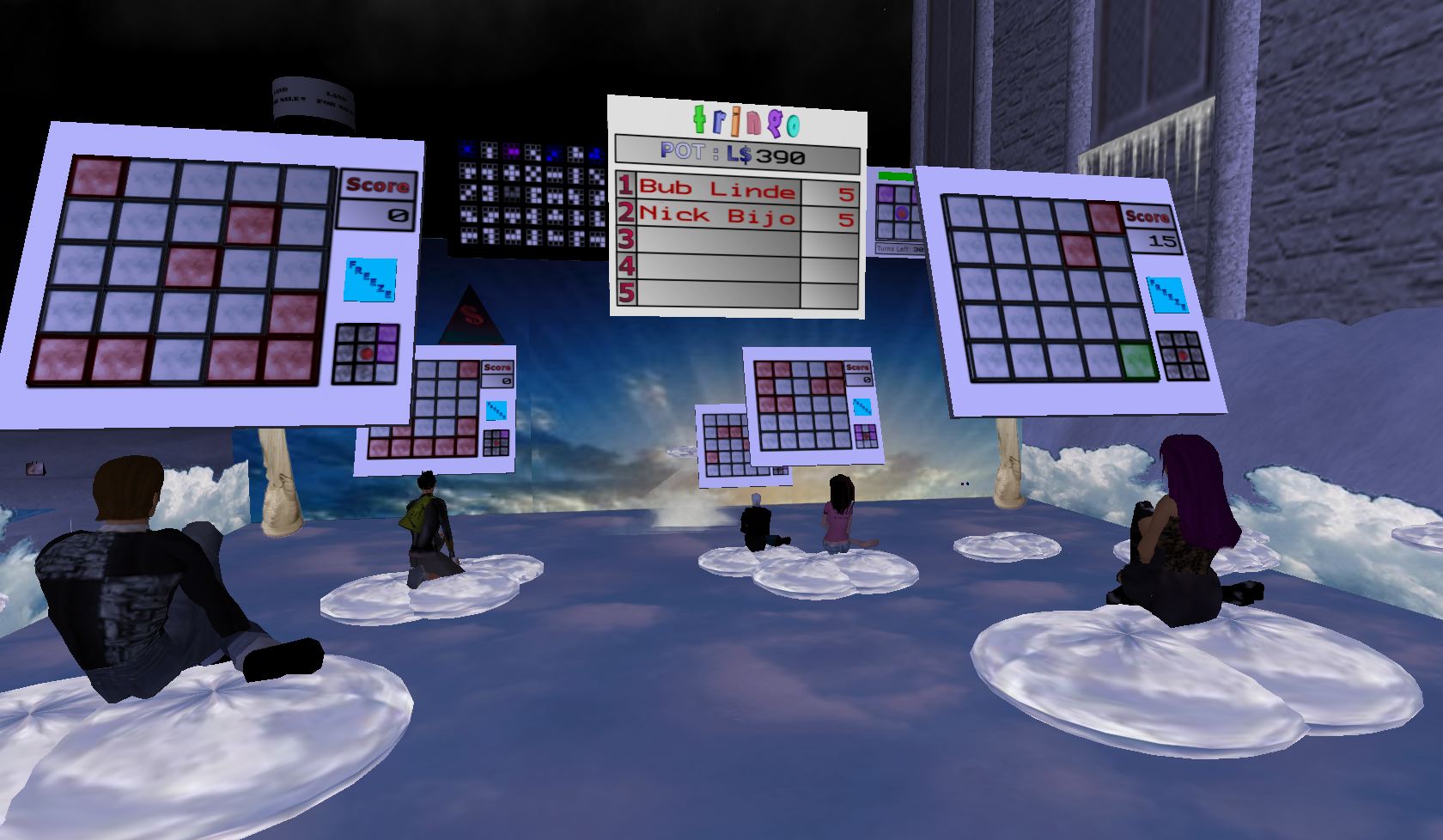

Socializing

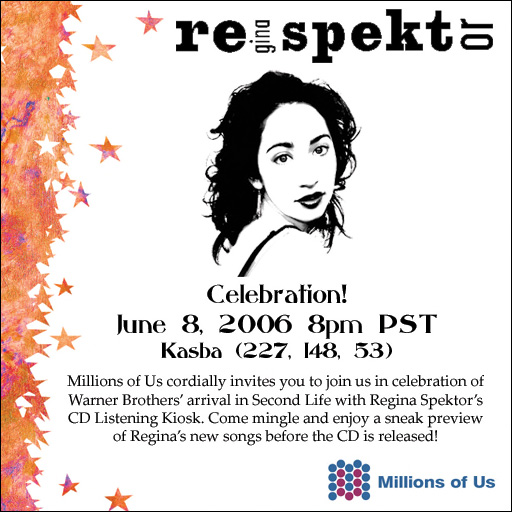

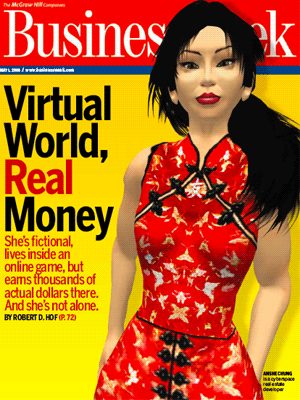

Business

The Early Years

- Founded in 1999 by Philip Rosedale, in San Francisco

- The inspirations:

  - Snow Crash

  - Previous Virtual Worlds

Why Then?

- Broadband was becoming ubiquitous.

- So was cheap consumer 3D hardware acceleration.

Before Beta

- Built the core technology and tools.

- Considered building content ourselves, but realized that costs were prohibitive.

- Give the tools to the users. They will build the world for us.

Launch

- Second Life was launched in June 2003

- A small core of dedicated users, but uptake was slow.

- Why?

Survival of the Fittest

- We had built a system in which you were taxed for the resources you used, and were given L$ to buy resources based on the quality of your content (as judged by ratings).

- Elegant, but completely ruthless. Too difficult for people to understand - too much like work. If you weren't constantly creating newer, better content, your creations would get DELETED.

- Users got angry!

The Breakthrough

- Instead of limiting what people can do (like a game), allow people to pay for as many resources as they want to use!

- Make it possible for users to benefit financially from content that they create, by supporting an L$<->US$ exchange (GOM first, then LindeX)

Accelerating Growth

- Growth slowly starts to accelerate. This is good!

- 2004 and 2005 see steady growth, doubling around every 6 months. But then, in 2006...

Account growth

- 3/06 - 150K accounts

- 6/06 - 300K accounts

- 9/06 - 735K accounts

- 10/18/06 - 1M accounts

- 12/14/06 - 2M accounts

- 1/28/07 - 3M accounts

- 2/24/07 - 4M accounts
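
The milestones above can be turned into doubling times to compare against the earlier pace of about six months. This is a rough sketch, not part of the original talk: it assumes exponential growth between consecutive milestones, reads the dates as month/day/year with "06" and "07" meaning 2006 and 2007, and uses only the entries that give a full date. The function names are illustrative.

```python
from datetime import date
from math import log


def doubling_time_days(d1, n1, d2, n2):
    """Days needed to double, assuming exponential growth between two points."""
    elapsed = (d2 - d1).days
    return elapsed * log(2) / log(n2 / n1)


def doubling_times(points):
    """Doubling time for each pair of consecutive (date, accounts) milestones."""
    return [
        doubling_time_days(d1, n1, d2, n2)
        for (d1, n1), (d2, n2) in zip(points, points[1:])
    ]


# Only the milestones listed with a full date (assumed m/d/yy).
milestones = [
    (date(2006, 10, 18), 1_000_000),
    (date(2006, 12, 14), 2_000_000),
    (date(2007, 1, 28), 3_000_000),
    (date(2007, 2, 24), 4_000_000),
]

for (start, accounts), days in zip(milestones, doubling_times(milestones)):
    print(f"from {start} ({accounts:,} accounts): doubling in about {days:.0f} days")
```

Under these assumptions, going from 1M to 2M accounts took 57 days and going from 2M to 4M took 72 days, compared with the roughly six-month doubling seen in 2004 and 2005.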

SL Map: 2003