Plenaries and Keynotes

Plenaries and Keynotes will be broadcast from June 1 to 15 at 1PM UTC on Big Screen and on IEEE.TV, with live comments and Q&A (only available through ieee.tv).

| Speaker | Title | Date and time |
| --- | --- | --- |
| Plenary Panel | Chair: Wolfram Burgard | Monday, June 1, 1PM UTC |
| Covid-19 | Ken Goldberg, UC Berkeley (Moderator); Robin Murphy, Texas A&M, USA; Gangtie Zheng, Tsinghua U, PRC | |
| Plenaries | | |
| Lydia E. Kavraki | Planning in Robotics and Beyond | Tuesday June 2, 1PM UTC |
| Yann LeCun | Self-Supervised Learning & World Models | Wednesday June 3, 1PM UTC |
| Jean-Paul Laumond | Geometry of Robot Motion: from the Rolling Car to the Rolling Man | Thursday June 4, 1PM UTC |
| Keynotes | | |
| Allison Okamura | Haptics for Humans in a Physically Distanced World | Monday June 8, 1PM UTC |
| Kerstin Dautenhahn | Human-Centred Social Robotics: Autonomy, Trust and Interaction Challenges | Tuesday June 9, 1PM UTC |
| Pieter Abbeel | Can Deep Reinforcement Learning from pixels be made as efficient as from state? | Wednesday June 10, 1PM UTC |
| Jaeheung Park | Compliant Whole-body Control for Real-World Interactions | Thursday June 11, 1PM UTC |
| Cordelia Schmid | Automatic Video Understanding | Friday June 12, 1PM UTC |
| Cyrill Stachniss | Robots in the Fields: Directions Towards Sustainable Crop Production | Monday June 15, 1PM UTC |
| Toby Walsh | How long before Killer Robots? | Tuesday June 16, 1PM UTC |
| Hajime Asama | Robot Technology for Super Resilience - Remote Technology for Response to Disasters, Accidents, and Pandemic | Wednesday June 17, 1PM UTC |

Plenary Speakers

Keynote Speakers

Pieter Abbeel

Can Deep Reinforcement Learning from pixels be made as efficient as from state?

Chair: Stéphane Régnier | Wednesday June 10, 1PM UTC

Learning from visual observations is a fundamental yet challenging problem in reinforcement learning. Although algorithmic advancements combined with convolutional neural networks have proved to be a recipe for success, it has been widely accepted that learning from pixels is not as efficient as learning from direct access to the underlying state. In this talk I will describe our recent work that (almost entirely) bridges the gap in sample complexity between learning from pixels and from state, as empirically validated on the DeepMind Control Suite and Atari games. In fact, I will present two new approaches establishing this new state of the art: Reinforcement Learning with Augmented Data (RAD) and Contrastive Unsupervised Representations for Reinforcement Learning (CURL). At the core of both is data augmentation through random crops. Our approaches outperform prior pixel-based methods, both model-based and model-free, on complex tasks in the DeepMind Control Suite and Atari games, showing 1.9x and 1.6x performance gains at the 100K environment-step and 100K interaction-step benchmarks, respectively.

Professor Pieter Abbeel is Director of the Berkeley Robot Learning Lab and Co-Director of the Berkeley Artificial Intelligence Research (BAIR) Lab. Abbeel’s research strives to build ever more intelligent systems: his lab pushes the frontiers of deep reinforcement learning, deep imitation learning, deep unsupervised learning, transfer learning, meta-learning, and learning to learn, and studies the influence of AI on society. His lab also investigates how AI could advance other science and engineering disciplines. Abbeel's Intro to AI class has been taken by over 100K students through edX, and his Deep RL and Deep Unsupervised Learning materials are standard references for AI researchers. Abbeel has founded three companies: Gradescope (AI to help teachers with grading homework and exams), Covariant (AI for robotic automation of warehouses and factories), and Berkeley Open Arms (low-cost, highly capable 7-dof robot arms). He advises many AI and robotics start-ups and is a frequently sought-after speaker worldwide for C-suite sessions on AI future and strategy. Abbeel has received many awards and honors, including the PECASE, NSF-CAREER, ONR-YIP, DARPA-YFA, and TR35. His work is frequently featured in the press, including the New York Times, Wall Street Journal, BBC, Rolling Stone, Wired, and Tech Review.

Hajime Asama

Robot Technology for Super Resilience - Remote Technology for Response to Disasters, Accidents, and Pandemic

Chair: Wolfram Burgard | Wednesday June 17, 1PM UTC

The use of remote-controlled machine technology, including robot technology, is essential in responding to disasters, accidents, and pandemics, where various tasks must be accomplished in hazardous environments. This presentation discusses the robot technology required to realize facilities, cities, and a society that are resilient against disasters and accidents. A new concept of “Super Resilience”, which means evolution of facilities, cities and society through experience of past disasters and accidents, and “Wa (harmony): Control for solving Societal Problems and Creating Societal Values” are introduced, focusing on robot technology.

Hajime Asama received his B.S., M.S., and Dr. Eng. degrees in Engineering from the University of Tokyo in 1982, 1984, and 1989, respectively. He worked at RIKEN (Institute of Physical and Chemical Research) in Japan from 1986 to 2002 as a research scientist, among other roles. He became a professor at RACE (Research into Artifacts, Center for Engineering) of the University of Tokyo in 2002, and has been a professor in the School of Engineering of the University of Tokyo since 2009. He received the SICE (The Society of Instrument and Control Engineers) System Integration Division System Integration Award for Academic Achievement in 2010, the RSJ (Robotics Society of Japan) Distinguished Service Award in 2013, and the JSME Award (Technical Achievement) in 2018, among others. He was the vice-president of RSJ in 2011-2012 and an AdCom member of the IEEE Robotics and Automation Society in 2007-2009. He has been the president-elect of IFAC since 2017 and the president of the International Society for Intelligent Autonomous Systems since 2014, and is an associate editor of Control Engineering Practice, Journal of Robotics and Autonomous Systems, and Journal of Field Robotics, among others. He was a member of the Science Council of Japan from 2014 to 2017 and has been a council member since 2017. He is a Fellow of IEEE, JSME and RSJ.

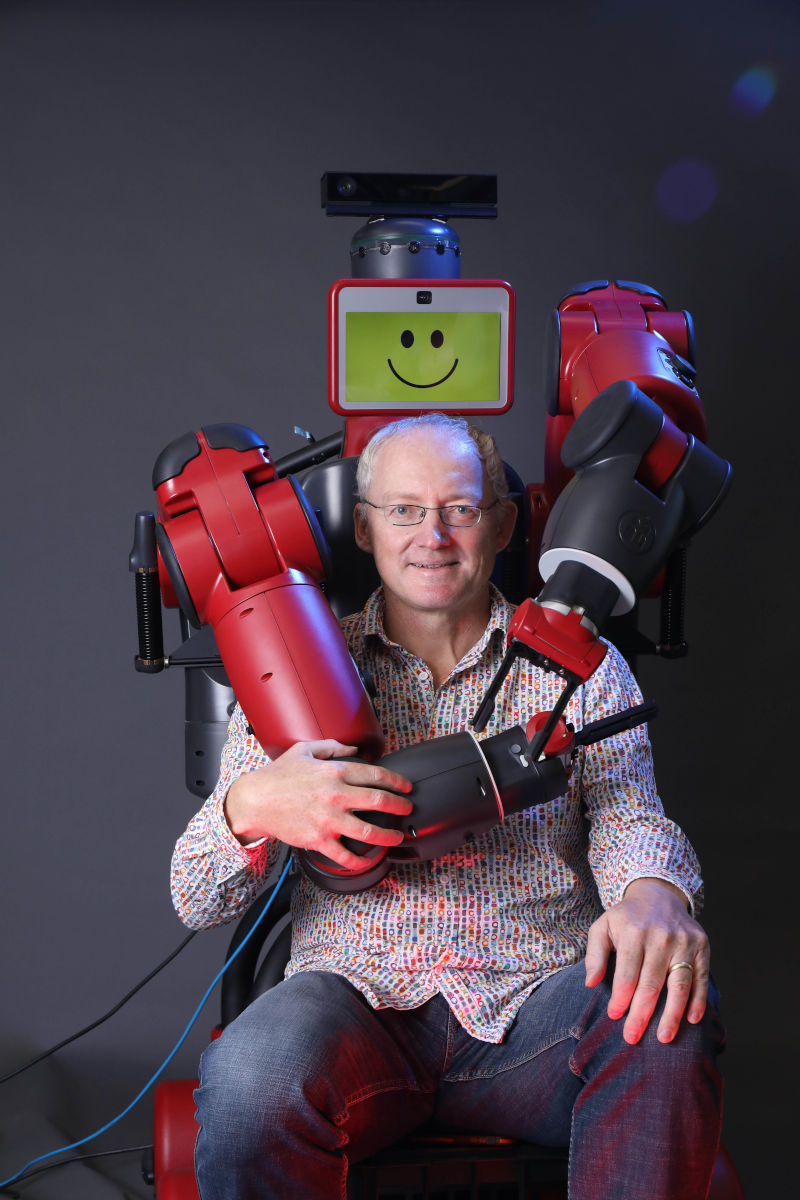

Kerstin Dautenhahn

Human-Centred Social Robotics: Autonomy, Trust and Interaction Challenges

Chair: Mohamed Chetouani | Tuesday June 9, 1PM UTC

Social robots that interact with people have been studied extensively over the past 20 years. Such research requires interdisciplinary approaches that cover areas such as engineering, robotics, and computer science, as well as psychology, social sciences and other fields. I have been involved in multiple projects over the past 25 years aimed at developing social robots that can interact “naturally” with people, i.e. meeting people’s expectations of what it means to interact with a robotic “social entity”. This involved fundamental research as well as application-oriented research. I’m particularly interested in application areas where robots do not replace people but can be used as efficient tools that complement human skills and provide an “added value”. These application areas include therapy and education for children and adults, as well as support and assistance for older people who live in their own homes or in care homes. In my keynote talk I will briefly introduce some issues that are key in those domains, in particular with respect to robot autonomy, people’s trust (and the danger of overtrust) in robots, and challenges in how to create useful, enjoyable, safe and non-judgemental interactions between people and robots.

Professor Dr Kerstin Dautenhahn is Canada 150 Research Chair in Intelligent Robotics at the University of Waterloo in Ontario, Canada. She has a joint appointment with the Departments of Electrical and Computer Engineering and Systems Design Engineering and is cross-appointed with the David R. Cheriton School of Computer Science at the University of Waterloo. In Waterloo she is director of the Social and Intelligent Robotics Research Laboratory (SIRRL). The main areas of her research are Human-Robot Interaction, Social, Cognitive and Developmental Robotics, Assistive Technology and Artificial Life. Before she moved to Canada in 2018 she developed and coordinated the Adaptive Systems Research Group at the University of Hertfordshire, United Kingdom, with which she is still affiliated. In 2019 she was elevated to IEEE Fellow for her contributions in Human-Robot Interaction and Social Robotics. She is a lifelong Fellow of AISB (The Society for the Study of Artificial Intelligence and Simulation of Behaviour) and one of the two Editors in Chief of the journal Interaction Studies: Social Behaviour and Communication in Biological and Artificial Systems, published by John Benjamins Publishing Company. She is also an Editorial Board Member of Adaptive Behavior (Sage Publications), Associate Editor of the International Journal of Social Robotics (Springer), and Associate Editor of IEEE Transactions on Cognitive and Developmental Systems. She is also an Editor of the book series Advances in Interaction Studies, published by John Benjamins Publishing Company.

Allison Okamura

Haptics for Humans in a Physically Distanced World

Chair: Sinan Haliyo | Monday, June 8, 1PM UTC

Physical contact is a key means for humans to interact with each other – to teach, provide physical assistance, and convey emotions. When humans are separated from each other, robots and haptic devices enable essential physical contact that is both safe and functional. In this talk, I will address haptic communication between humans and robots, humans and virtual agents, and humans and humans through novel wearable haptic devices that enable communication in a salient but private manner that frees other sensory channels. For such devices to be ubiquitous, they must be intuitive and unobtrusive. The amount of information that can be transmitted through touch is limited in large part by the location, distribution, and sensitivity of human mechanoreceptors. Not surprisingly, many haptic devices are designed to be held or worn at the highly sensitive fingertips, yet stimulation using a device attached to the fingertips precludes natural use of the hands. Thus, we explore the design of a wide array of haptic feedback mechanisms, ranging from devices that can be actively touched by the fingertips to multi-modal haptic actuation mounted on the arm. We demonstrate how these devices are effective in virtual reality, human-robot communication, and human-human communication.

Allison M. Okamura received the BS degree from the University of California at Berkeley and the MS and PhD degrees from Stanford University, all in mechanical engineering. She is currently Professor in the mechanical engineering department at Stanford University, with a courtesy appointment in computer science. She is an IEEE Fellow and Editor-in-Chief of the journal IEEE Robotics and Automation Letters. Her awards include the 2019 IEEE Robotics and Automation Society Distinguished Service Award, 2016 Duca Family University Fellow in Undergraduate Education, 2009 IEEE Technical Committee on Haptics Early Career Award, 2005 IEEE Robotics and Automation Society Early Academic Career Award, and 2004 NSF CAREER Award. Her academic interests include haptics, teleoperation, virtual environments and simulators, medical robotics, neuromechanics and rehabilitation, prosthetics, and engineering education. Outside academia, she enjoys spending time with her husband and two children, running, and playing ice hockey. For more information about her research, please see the Collaborative Haptics and Robotics in Medicine (CHARM) Laboratory website: https://charm.stanford.edu.

Jaeheung Park

Compliant Whole-body Control for Real-World Interactions

Chair: Raja Chatila | Thursday June 11, 1PM UTC

As more complicated robots such as humanoid robots are expected to operate in complex environments, whole-body control has progressed greatly for robots operating in multiple-contact scenarios. Various approaches can now be used to create compliant whole-body motion, depending on the hardware and control methods. In this talk, I would like to share my experience of implementing compliant whole-body motion control in two settings. The first is on a torque-controlled robot using a whole-body controller based on the operational space control framework, and the second is on a position-controlled robot, where compliant motion is created using disturbance estimation. Implementation issues in these approaches will also be discussed. Finally, other exciting work in our research group will be briefly presented.

Jaeheung Park has been a Professor in the Department of Transdisciplinary Studies at Seoul National University, South Korea, since 2009. Prior to joining Seoul National University, he worked briefly at Hansen Medical Inc, a medical robotics company. He received the Ph.D. degree from Stanford University, and the B.S. and M.S. degrees from Seoul National University. His research group is currently conducting many national projects on the topics of humanoid robots, rehabilitation/medical robots, and autonomous vehicles. He was the team leader of TEAM SNU for the DRC Finals 2015 (DARPA Robotics Challenge Finals), a robotics competition for disaster response. In 2016, his team won the award given by the Minister of Science, ICT, and Future Planning at the Challenge Parade held by the Korean Government. He has participated in organizing many international robotics conferences such as IROS, HUMANOIDS, and HRI. He has also served as a Conference Editorial Board member or as an Associate Editor for many international conferences. He currently serves as a co-chair of the RAS Technical Committee on Whole-body Control.

Cordelia Schmid

Automatic Video Understanding

Chair: Wolfram Burgard | Friday June 12, 1PM UTC

Recent progress in deep learning has significantly advanced performance in understanding actions in videos. We start by presenting an approach for the spatio-temporal localization of actions. We describe how action tubelets result in state-of-the-art performance for action detection and show how modeling relations with objects and humans further improves performance. Next we introduce an approach for behavior prediction for self-driving cars. We conclude by presenting results on how to use multi-modal information in video understanding.

Cordelia Schmid has held a permanent researcher position at Inria since 1997, where she is a research director. Since 2018 she has held a joint appointment with Google Research. She has published more than 300 articles, mainly in computer vision. She has been editor-in-chief for IJCV (2013-2018), a program chair of IEEE CVPR 2005 and ECCV 2012, and a general chair of IEEE CVPR 2015, ECCV 2020 and ICCV 2023. In 2006, 2014 and 2016, she was awarded the Longuet-Higgins prize for fundamental contributions in computer vision that have withstood the test of time. She is a fellow of IEEE. She was awarded an ERC advanced grant in 2013, the Humboldt Research Award in 2015 and the Inria & French Academy of Science Grand Prix in 2016. She was elected to the German National Academy of Sciences, Leopoldina, in 2017. In 2018, she received the Koenderink Prize for fundamental contributions in computer vision. She received the Royal Society Milner award in 2020.

Cyrill Stachniss

Robots in the Fields: Directions Towards Sustainable Crop Production

Chair: Torsten Kröger | Monday June 15, 1PM UTC

Food, feed, fiber, and fuel: crop farming plays an essential role for the future of humanity and our planet. We rely heavily on agricultural production and, at the same time, need to reduce its footprint: less input of chemicals like herbicides and fertilizer and of other limited resources. Simultaneously, the decline in arable land and climate change pose additional constraints like drought, heat, and other extreme weather events. Robots and other new technologies offer promising directions to tackle different management challenges in agricultural fields. To achieve this, autonomous field robots need the ability to perceive and model their environment, to predict possible future developments, and to make appropriate decisions in complex and changing situations. This talk will showcase recent developments towards robot-driven sustainable crop production. We will illustrate how certain management tasks can be automated using UAVs and UGVs and what new possibilities this technology offers. In addition to work conducted in collaborative European projects, the talk covers ongoing developments of the Cluster of Excellence “PhenoRob - Robotics and Phenotyping for Sustainable Crop Production” and some of our current exploitation activities.

Cyrill Stachniss has been a full professor at the University of Bonn since 2014 and heads the Photogrammetry and Robotics Lab. Before being appointed in Bonn, he was a lecturer at the University of Freiburg as well as with the Swiss Federal Institute of Technology. The research activities of the Photogrammetry and Robotics Lab focus on probabilistic techniques in the context of mobile robotics, navigation problems, and visual perception. The lab has made several contributions in the areas of SLAM, localization, place recognition, autonomous exploration and planning, and semantic interpretation, and covers ground vehicles as well as UAVs. The two main application areas of the lab are agricultural robotics and autonomous cars. Cyrill Stachniss has coauthored over 220 scientific publications and was senior editor of the IEEE Robotics and Automation Letters as well as associate editor of the IEEE Transactions on Robotics. He is a Microsoft Research Faculty Fellow and received the IEEE RAS Early Career Award in 2013. He actively participates in German and European research projects. He is spokesperson of the DFG Cluster of Excellence “PhenoRob - Robotics and Phenotyping for Sustainable Crop Production” at the University of Bonn. Furthermore, he acted as coordinator of the EC-funded project “ROVINA” for digitizing the Roman catacombs with robots and as the spokesperson of the DFG research unit “Mapping on Demand”. Besides his university involvement, he co-founded three startups: Escarda Technologies, DeepUp, and PhenoInspect.

Toby Walsh

How long before Killer Robots?

Chair: Raja Chatila | Tuesday June 16, 1PM UTC

In 2007, Noel Sharkey stated that "we are sleepwalking into a brave new world where robots decide who, where and when to kill". Since then, thousands of AI and robotics researchers have joined his calls to regulate "killer robots". But sometime this year, Turkey will deploy fully autonomous home-built kamikaze drones on its border with Syria. What are the ethical choices we need to consider? Will we end up in an episode of Black Mirror? Or is the UN listening to calls and starting the process of regulating this space? Prof. Toby Walsh will discuss this important issue and consider where we are and where we need to go. In 2015, he helped launch an open letter calling on the UN to regulate fully autonomous weapons that made headlines around the world. Since then, he has spoken multiple times at the UN in New York and Geneva, and to politicians and the public around the world about these important issues.

Toby Walsh is Scientia Professor of Artificial Intelligence at the University of New South Wales and Data61, guest professor at the Technical University of Berlin, and adjunct professor at QUT. He was named by The Australian newspaper as one of the "rock stars" of Australia's digital revolution. Professor Walsh is a strong advocate for limits to ensure AI is used to improve our lives. He has been a leading voice in the discussion about autonomous weapons (aka "killer robots"), speaking at the UN in New York and Geneva on the topic. He is a Fellow of the Australian Academy of Science and recipient of the NSW Premier's Prize for Excellence in Engineering and ICT. He appears regularly on TV and radio, and has authored two books on AI for a general audience, the most recent entitled "2062: The World that AI Made".